Ryan F9 has become a YouTube sensation thanks to his wit, no-nonsense on-camera presence, and entertaining video montages. I appreciate the artistry and dialogue in his videos but take issue with inaccuracies in some of them.

Contrary to how he presents himself, Ryan isn’t a helmet safety expert. Very few people are. Specifically, I notice that he doesn’t understand the 65-year-old Snell Memorial Foundation’s methods and testing standards. Neither has Ryan fully grasped the intricacies of ECE 22.05 or the new 22.06 standard.

I’ve chosen not to explore the FIM standard in much detail in this piece to avoid further confusion.

Full Disclosure: I’m Not An Expert Either

I admit that I still don’t understand everything about the standards the way true industry experts do, but I’m familiar enough with them to call out some of the misinformation being spread around the world wide web. That is my goal in writing this article.

This is heavy, technical stuff to digest, and while figuratively putting pen to paper, I spoke directly to the experts at The Snell Memorial Foundation before publishing (and also spent weeks poring over the ECE and Snell standards).

Unfortunately, I didn’t have any ECE experts to consult with—but I’m keen to connect with some.

I also spoke with representatives from MIPS to get their input. Read on for more.

The Issue at Hand: How Meaningful is Snell M2020?

On July 26, 2021, I watched a YouTube video from Ryan Kluftinger (aka Ryan F9 of FortNine fame) and read a subsequent article by Bradley Brownell that echoed the video’s claims about the Snell M2020 homologation.

This “new” Snell standard was first shared with the world back in late 2019 and shouldn’t have been news to anyone by mid-2021.

Don’t Believe the Haters

Both pieces aired on the same day and got plenty of clicks and applause because the video and article were presented as if a great secret had just been unearthed. These two pieces of media (coupled with confusion among the public about helmet safety standards) have led to some motorcyclists mistakenly believing Snell-approved helmets should be avoided because they won’t protect properly in a crash (particularly in low-speed crashes, which are more commonplace).

Essentially, Ryan and Brad claim that the Snell standard is now meaningless because there are two versions of M2020: M2020D for North America (aligned with DOT) and M2020R for everywhere else (aligned with ECE).

mean·ing·less

/ˈmēniNGləs/

adjective

- having no meaning or significance.

“the paragraph was a jumble of meaningless words”

Similar: unintelligible, incomprehensible, incoherent, illogical, senseless, unmeaning, foolish, silly.

“rules are meaningless to a child if they do not have a rationale.”

Calling Snell M2020 Meaningless is Utter Nonsense

To quote the great John Cleese from a recent interview with Bill Maher: “What I realize at 75 (years old) now, is that almost nobody has any idea what they’re talking about.”

The Trouble with Influencers

In his video, Ryan F9 primarily resurrected out-of-date attacks made against the Snell M2008 standard by Dexter Ford back in 2009. It’s true there were some legitimate shortcomings in that old Snell standard, but those valid points from 14 years (and 3 generations of Snell standards) ago have since been addressed. Dexter Ford’s Blowing The Lid Off Snell article isn’t relevant to M2020.

Some of the points mentioned in Ryan’s video that I’ll address in this article include:

- Snell shells are too hard to protect the wearer in lower velocity impacts

- Snell wants to hit helmets unrealistically hard during their testing

- It’s impossible to differentiate between an M2020D and M2020R helmet

- Snell testing requires two impacts (redundant) to the same location on the helmet

- Snell testing doesn’t allow helmets to freefall onto the anvil test surface as ECE does

- Snell doesn’t do oblique testing (impacts to an angled surface) as ECE does

- Snell created M2020R just to keep money flowing their way

Soft ECE vs. Hard Snell Shells

Critics of Snell standards have said that helmets need to move towards having a softer, more flexible shell. The thinking behind ECE 22.05 (and now 22.06) is that such shells better absorb energy in impacts on the flat and kerbstone anvils ECE tests on.

A Peach in a Cocktail Shaker?

Ryan champions this idea by comparing people’s heads housed inside Snell-approved harder shells to a peach rattling around inside a steel cocktail shaker—when in reality, Snell shells flex when struck and are hardly made of steel.

Are Snell’s harder shells truly too stiff, or is this a widely-held misunderstanding?

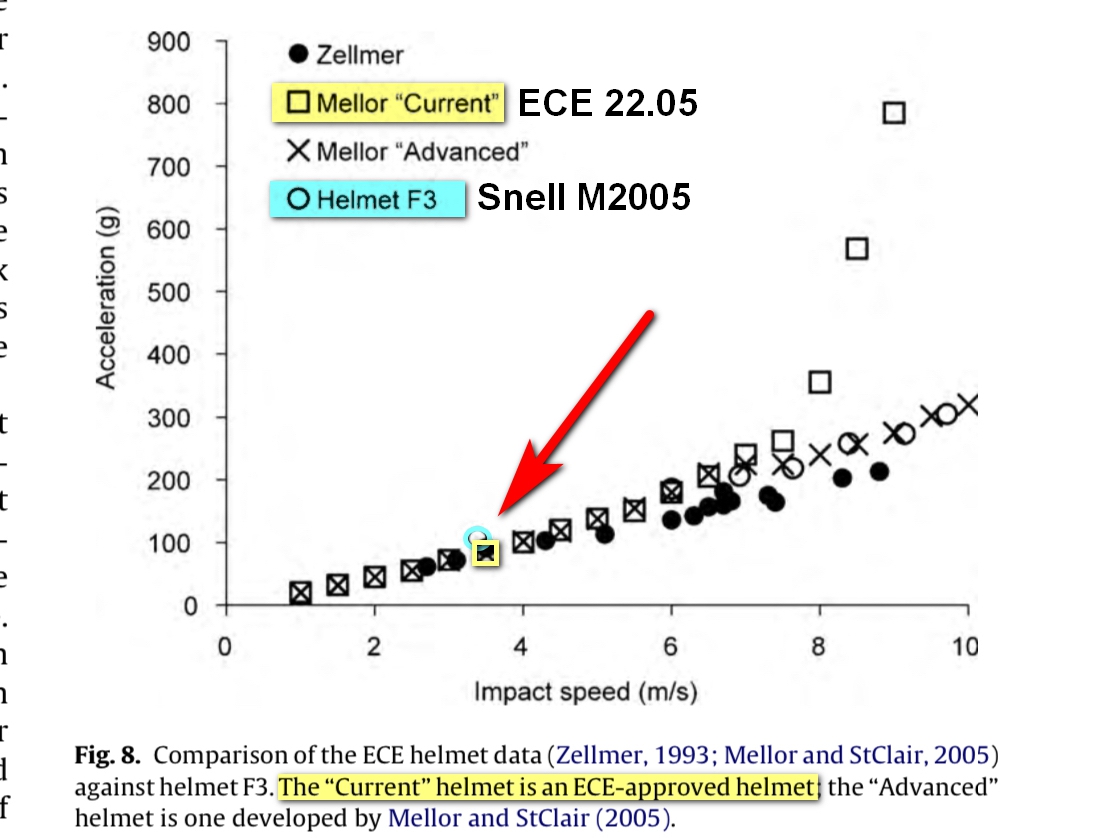

Check out this graph, which was referenced in a study done in 2009 called The impact response of motorcycle helmets at different impact severities.

I traced a blue circle around the Snell M2005 approved helmet and a yellow square around the plot for the ECE 22.05 approved helmet at the low-velocity testing mark of 3.9 m/s. You can see the forces measured overlap because they’re virtually identical at just under 100 G.

These results are from crash tests performed on flat anvils, which is a normal requirement in both Snell and ECE testing. This myth has just been busted.

Why the Snell Standards Seem Widely Misunderstood

Why do so many people like Ryan and Bradley believe Snell-approved shells are dangerously hard and will damage your peach—uh, head—in a low-speed crash?

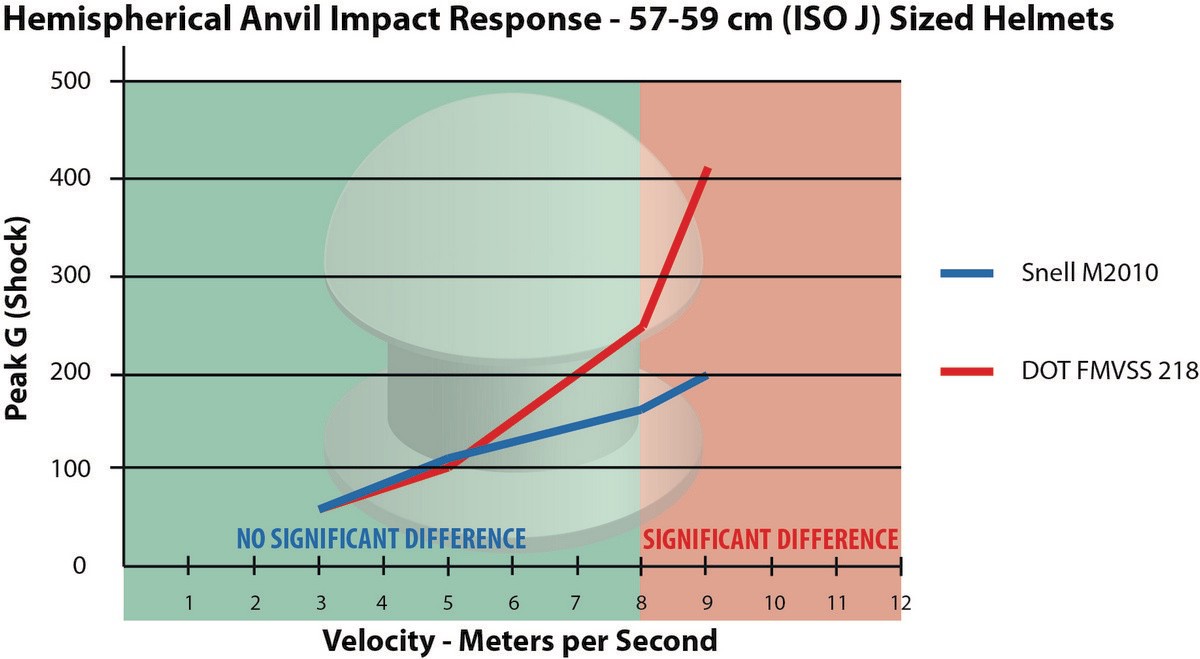

Ryan’s correct in saying Snell punches more force into the helmet shells during their impact testing because they use hemispherical-shaped anvils. Having said that, he’s mistaken in equating this with injury to the wearer or in calling Snell’s standards unrealistic.

Let me explain.

Smaller Surface Area = Greater Penetration/Force Transfer

Both ECE and Snell require the same peak acceleration limit (less than 275 G), even at higher velocities (8.2 m/s for the new ECE 22.06 standard). But they use different anvils and velocities in their testing.
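Note that a peak G figure is an acceleration rather than an energy. As a rough illustration, Newton’s second law (F = m·a) turns the 275 G ceiling into a force on the headform. This minimal sketch assumes a hypothetical 5 kg rigid headform; actual headform masses are set per size by each standard and aren’t given in this article.

```python
# Convert a peak-acceleration limit given in g into the force acting on a
# rigid headform of known mass, using Newton's second law (F = m * a).
STANDARD_GRAVITY = 9.80665  # m/s^2, one standard g


def peak_force_newtons(peak_g: float, headform_mass_kg: float) -> float:
    """Force (N) needed to accelerate a rigid headform at peak_g."""
    return headform_mass_kg * peak_g * STANDARD_GRAVITY


# Hypothetical 5.0 kg headform; real headform masses vary by size and standard.
limit_n = peak_force_newtons(275, 5.0)
print(f"275 G on a 5 kg headform is about {limit_n:.0f} N")
```

Under those assumptions the limit works out to roughly 13.5 kN; a heavier or lighter headform scales the figure proportionally.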

Velocity

Believe it or not, ECE smashes helmets onto anvils at a higher speed than Snell does. Snell’s maximum test speed is 7.9 m/s, on an edge anvil.
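Impact speed maps directly onto drop height and impact energy through the textbook relations h = v²/(2g) and E = ½mv². The sketch below applies them to the 7.9 m/s and 8.2 m/s figures quoted above, assuming a drag-free fall and comparing energy per kilogram so the (unstated) headform masses cancel out.

```python
# Relate impact speed to an equivalent free-fall height and to impact energy.
# Assumes a drag-free fall; energy is per kilogram so headform mass cancels.
STANDARD_GRAVITY = 9.80665  # m/s^2


def equivalent_drop_height(v: float) -> float:
    """Height (m) of a drag-free fall that ends at speed v (m/s): h = v^2 / (2g)."""
    return v ** 2 / (2 * STANDARD_GRAVITY)


def kinetic_energy_per_kg(v: float) -> float:
    """Kinetic energy (J) per kilogram of falling mass at speed v: E/m = v^2 / 2."""
    return 0.5 * v ** 2


snell_edge = 7.9  # m/s, Snell maximum test speed quoted in the article
ece_2206 = 8.2    # m/s, ECE 22.06 test speed quoted in the article

for name, v in [("Snell edge", snell_edge), ("ECE 22.06", ece_2206)]:
    print(f"{name}: {v} m/s ~ {equivalent_drop_height(v):.2f} m drop, "
          f"{kinetic_energy_per_kg(v):.1f} J/kg")

ratio = kinetic_energy_per_kg(ece_2206) / kinetic_energy_per_kg(snell_edge)
print(f"Energy at 8.2 m/s is {100 * (ratio - 1):.1f}% higher than at 7.9 m/s")
```

On this simplified basis, 8.2 m/s corresponds to a drop of about 3.43 m versus about 3.18 m for 7.9 m/s, or roughly 7.7% more impact energy for the same mass.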

But there’s a lot more to this than just the test helmet’s velocity. As mentioned above, the anvil shape really matters.

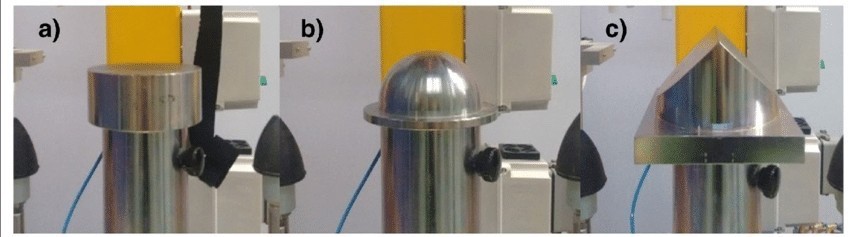

Snell testing anvils (above) from left to right are flat, hemispherical, and edge, although the edge anvil Snell currently uses no longer has the sharp edge shown in this photo. It’s a dull one now.

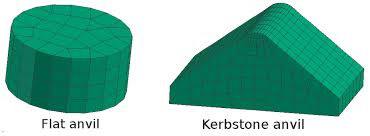

Here (below) are the two ECE testing anvils: flat and kerbstone.

Both ECE anvils have fairly large surface areas on them, which spreads out the force of the impact on the shell—allowing it to disperse the energy more than an anvil with a smaller surface area would.
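The principle at work is mean pressure = force / contact area: the same force concentrated on a smaller patch loads the shell far more locally. In the sketch below, the force and both contact areas are made-up round numbers, not measurements of any Snell or ECE anvil; real contact patches depend on shell stiffness and change throughout the impact.

```python
# Mean contact pressure = force / contact area.
# All numbers are hypothetical round values chosen to show the trend only.

def mean_contact_pressure_kpa(force_n: float, contact_area_cm2: float) -> float:
    """Average pressure (kPa) when force_n is spread over contact_area_cm2."""
    area_m2 = contact_area_cm2 / 10_000  # 1 m^2 = 10,000 cm^2
    return force_n / area_m2 / 1_000     # Pa -> kPa


FORCE_N = 10_000  # hypothetical force, identical for both cases

for label, area_cm2 in [("broad contact patch (hypothetical)", 40.0),
                        ("small contact patch (hypothetical)", 10.0)]:
    print(f"{label}: {mean_contact_pressure_kpa(FORCE_N, area_cm2):,.0f} kPa")
```

Quartering the contact area quadruples the average pressure on the shell, which is the intuition behind the heading above.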

The Hemi Anvil

The Snell hemispherical anvil shows no mercy to the helmet because the force of the falling helmet and the test headform inside it is focused on a smaller point of impact. A rounded anvil striking a rounded helmet shell at 7.9 m/s is more devastating than an elongated kerbstone edge. According to Snell, that’s partly why ECE doesn’t use the hemispherical anvil: softer ECE shells can’t disperse the energy enough to meet the <275 G limit that both ECE and Snell insist upon.

Snell seems to be asking a lot more from their helmets in this regard than ECE does, which bodes well for anyone’s head housed inside a Snell-approved helmet if they crash and hit their head on a rounded object. I’m not saying ECE isn’t a good standard. I have no issue encouraging anyone to wear an ECE-rated helmet, but there’s a difference.

Have a look at this video I filmed at the Arai Factory in Omiya, Japan in 2019, while they were showing me some impact testing in their lab using the hemispherical and flat anvils.

Hit It Again, Snell!

This brings me to Ryan’s point that Snell’s insistence on striking the same place on a helmet twice is redundant and/or inappropriate for motorcycle applications. He says it’s a misplaced test borrowed from the automotive racing industry, where crash bars are a threat to drivers, and isn’t “real world” for motorcyclists.

Speaking as an avid adventure rider, I’ll have to disagree.

I took this photo while riding in South Dakota during the 2021 GET ON! Adventure Fest Rally with my friend Brian. He fell while climbing this relatively steep hill, pinned his leg between the bike and the rocks, and broke his ankle. When I took the photo, we were waiting for EMS to arrive and get him out of there.

Look at the size and shape of rocks on this hill and ask yourself which helmet safety standard would hold up better smashing down on this terrain. In my opinion, the hemispherical anvil best resembles the nastiest rocks in the photo, although many are shaped like the ECE kerbstone too.

A Second Fall

If Brian had smacked his head on the rocks (instead of breaking his ankle) and the helmet did its job protecting him, he would be free to carry on with the Rally ride, right? What happens if he falls again and hits his head in the same location during the rest of the ride? Is it likely to happen? The chances are probably slim, but it isn’t impossible.

What about a different scenario where Brian falls backward off the bike and tumbles down the hill uncontrolled? I’d say there’s a higher probability of striking the same spot (or very close to it) twice in that case.

ECE vs. Hemispherical Anvils

The team at Snell tell me that an ECE-approved helmet will be totally overcome by the energy from a hemispherical anvil strike based on their testing history. Is this the truth or just Snell’s bias manifesting as belief? They didn’t show me proof of this claim, so I can’t be sure. I would venture to guess that a good quality ECE helmet from a reputable builder probably could take one good hit on rocks or a rounded anvil, but twice might not be so nice.

I do have data to show how a DOT helmet compares against a Snell helmet when striking the hemispherical anvil seen in the image below.

Replace Your Helmet

We all know that helmets are supposed to be replaced after being in a crash, but some people are hesitant to do this because of the “it seems fine to me” attitude. There’s also the cost of replacement coming into the equation.

In a case like Brian’s above, he wouldn’t have been able to replace the helmet until he got out of the backcountry, so he would be forced to continue riding with a compromised helmet for at least a short time.

This is why Snell justifies continuing to hit helmets twice in the same spot during their M2020D testing. Protecting people from their own worst enemy sure makes sense to me.

M2020R Single Impacts Only

Snell has removed the double hits from their M2020R testing to better align with ECE testing. Ryan recognizes this change and jumps on Snell for doing what their critics have told them to do (i.e., be more like ECE) by saying: “If the name can mean 2 different things, does it mean anything?”

Yes, something can be two different things and still have meaning or significance. For example, we already have at least two different versions of the same helmets in the world thanks to DOT and ECE being so different while also being government-mandated standards.

A Shoei Neotec II in Paris, France will be ECE only, while one in Dallas, Texas will be DOT only. Both are still the same good quality helmet, but the construction has to be slightly altered to pass DOT vs ECE. Many people don’t realize this is the case but ask a manufacturer and they’ll confirm it.

Some helmets can pass both DOT and ECE without modifications, and you’ll see both certifications on the safety stickers on the back.

Mandatory vs. Voluntary Certification

Just to clarify: DOT is a mandatory certification for helmets used in the United States only. ECE isn’t recognized in the US at this time.

ECE is the equivalent mandatory certification in Europe where DOT isn’t recognized.

Snell certification isn’t mandatory anywhere. It’s an enhanced or stricter certification standard for helmets from all over the world to achieve. Think of it as an elite level of safety performance indicator.

FIM is kind of a European equivalent of Snell in that it too is a voluntary enhanced standard for manufacturers to achieve. FIM was specifically made for racing applications while Snell wasn’t, although for a long time Snell was the required certification for racing helmets.

The ECE “Free Fall” Advantage

Ryan praises the way ECE testing allows helmets to freefall onto anvils during their testing to better imitate a real crash while vilifying Snell for using a guided rail fall system.

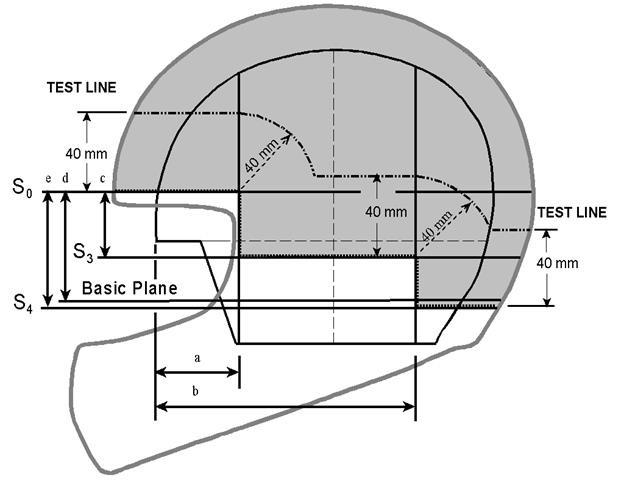

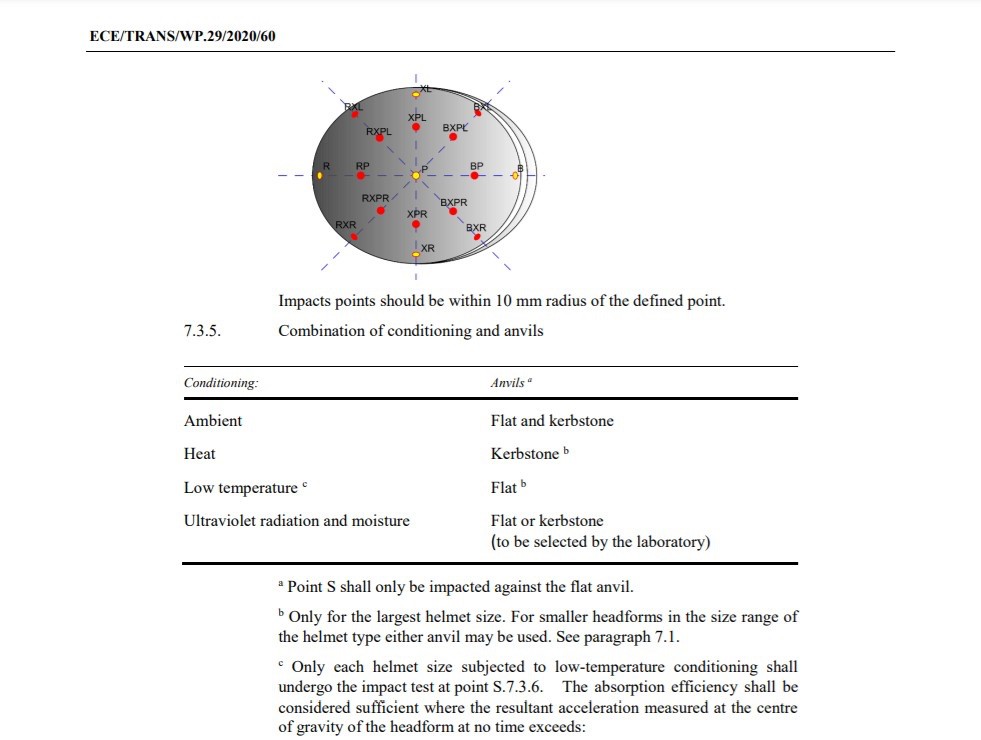

The two apparatuses are different, but the ECE system doesn’t let the helmet fall uncontrolled because the regulations stipulate that all impacts must hit within a 10mm radius of 12 specific targeted points (the red dots) on the helmet—as outlined in the image below.

How could the ECE test be that accurate if a helmet was truly unguided and allowed to free-fall onto the anvil while attaining a velocity of 8.2 m/s? The testing technician would need to be a world champion Plinko player to manage that.
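To put the 10 mm figure in perspective, hitting a target means the measured impact point lands inside a 10 mm-radius circle around the marked spot. The sketch below is a minimal illustration of that check, assuming impact points recorded as 3-D coordinates in millimetres; `within_tolerance` is a hypothetical helper, not the measurement procedure the regulation prescribes. It needs Python 3.8+ for `math.dist`.

```python
import math

TOLERANCE_MM = 10.0  # impact-point tolerance radius cited in the article


def within_tolerance(target_mm, actual_mm, tolerance_mm=TOLERANCE_MM):
    """Return True if the actual impact point is within tolerance_mm of the target.

    Points are hypothetical (x, y, z) coordinates in millimetres. The
    straight-line (chord) distance is a simplification: on a curved shell
    the distance measured along the surface is slightly longer.
    """
    return math.dist(target_mm, actual_mm) <= tolerance_mm


print(within_tolerance((0.0, 0.0, 0.0), (6.0, 8.0, 0.0)))  # True: exactly 10 mm away
print(within_tolerance((0.0, 0.0, 0.0), (7.0, 8.0, 0.0)))  # False: about 10.6 mm away
```

A miss of barely more than a centimetre already fails the check, which is why an unguided helmet would be hard to aim.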

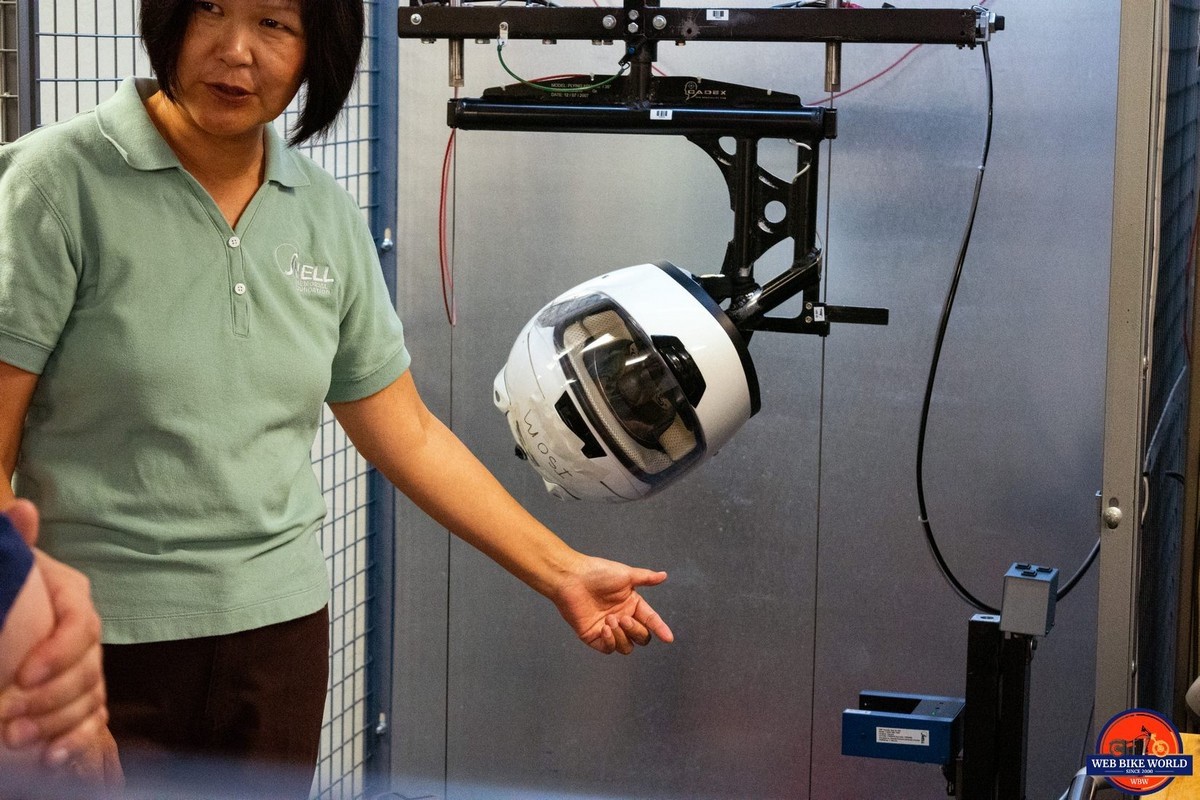

The way ECE testers accurately hit those marks is by using a large ring-shaped bracket that the helmet sits cradled inside with the target area facing down and exposed to the striking surface of the anvil (as seen in the images above and below).

The ring bracket mouth is wide enough to allow the anvil to pass through and strike the helmet as it falls, GUIDED by the arm that the ring bracket is attached to. The bracket and helmet fall together until striking the anvil to limit unwanted rotation of the helmet or let it wander off-target—so no separation or actual free fall is happening until the helmet impacts the test anvil.

You can watch the full EVO Helmets video I took these photos from to see it in action, and then compare it to the other video I shot at the Arai factory showing the Snell apparatus at work.

Snell vs. ECE: Six of One or Half a Dozen of the Other?

How is this ECE method creating a more accurate real-world simulation as Ryan claims? All I can come up with is that the helmet can bounce freely off the anvil after striking it, but is that truly valuable?

To my thinking, the only real-world scenario this ECE system best replicates is how a decapitated head would act in a crash scenario, indoors… in a laboratory… in a completely controlled environment with no motorcycles around.

I don’t think the Snell system reflects a real-world scenario either, for that matter, because all this testing needs to be clinical in order to be consistent, controlled, and repeatable. In a nutshell, they’re just trying to introduce a measured amount of energy into the helmets to ensure enough of it gets absorbed or deflected away from the wearer’s brain. That’s it.

Why Snell Testing is More Thorough

Snell testing technicians have complete freedom to choose what location on any helmet will be crash-tested above a specified testing line—shown in the diagram below as the shaded area.

Their techs are trained to purposely target areas they suspect are weaker, while the ECE tech has no such option.

In short, Snell is trying to find a weak spot to make the helmet fail the test, while an ECE tech is strictly hitting predetermined targets.

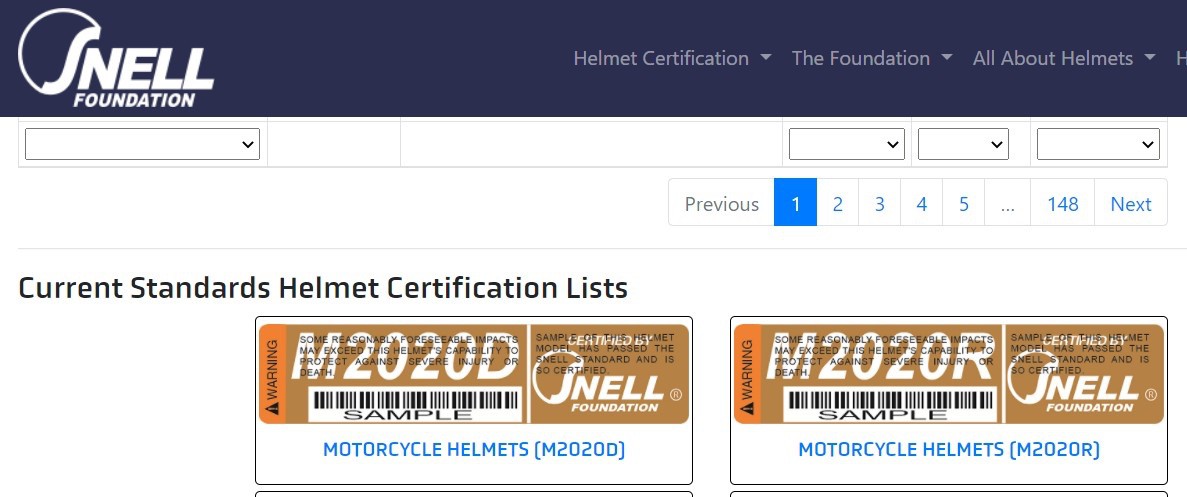
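Logically, the difference comes down to taking the worst result over a fixed list of points versus over any point the technician chooses. As long as the technician’s search area covers the preset points, the worst result found can never be better than the preset-only result. The sketch uses invented location names and peak G values purely to show that logic; it does not represent either standard’s real test points or any real helmet.

```python
# Illustrates "preset targets" vs "technician searches for the weak spot".
# Location names and peak-G values are invented for illustration only.

def worst_peak_g(peaks_by_location, locations):
    """Highest peak acceleration (g) among the chosen impact locations."""
    return max(peaks_by_location[loc] for loc in locations)


peaks = {"crown": 190, "front": 210, "rear": 205,
         "left": 220, "right": 215, "vent_edge": 280}  # hypothetical results
fixed_points = ["crown", "front", "rear", "left", "right"]  # hypothetical presets
LIMIT_G = 275

preset = worst_peak_g(peaks, fixed_points)  # only the predetermined targets
searched = worst_peak_g(peaks, peaks)       # any location, including the weak spot
print(preset <= LIMIT_G, searched <= LIMIT_G)  # True False
```

In this made-up case, the helmet passes when only the preset points are struck but fails once the weak spot is targeted.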

Which Is It? M2020R or M2020D?

Ryan also mistakenly proclaims that it will be impossible to tell the difference between a Snell M2020D helmet built for the North American market and an M2020R helmet sold in other parts of the world because the “same sticker will be on both”.

In response to that, I’ll share the photo below, which was easily found on Snell’s website.

Every M2020 Snell-approved helmet has one of these stickers on the inside, behind the comfort liner, to indicate compliance with the standard. There’s even a unique serial number on the sticker assigned to that individual helmet.

No Snell Oblique Testing

Here’s one of the most confusing areas of helmet testing, and for good reason.

Unbeknownst to most people, Snell is working independently to clarify and validate oblique testing. Contrary to what Ryan implies in the video, Snell recognizes the danger of rotational forces on the brain, and they’re searching for ways to help protect motorcyclists from it.

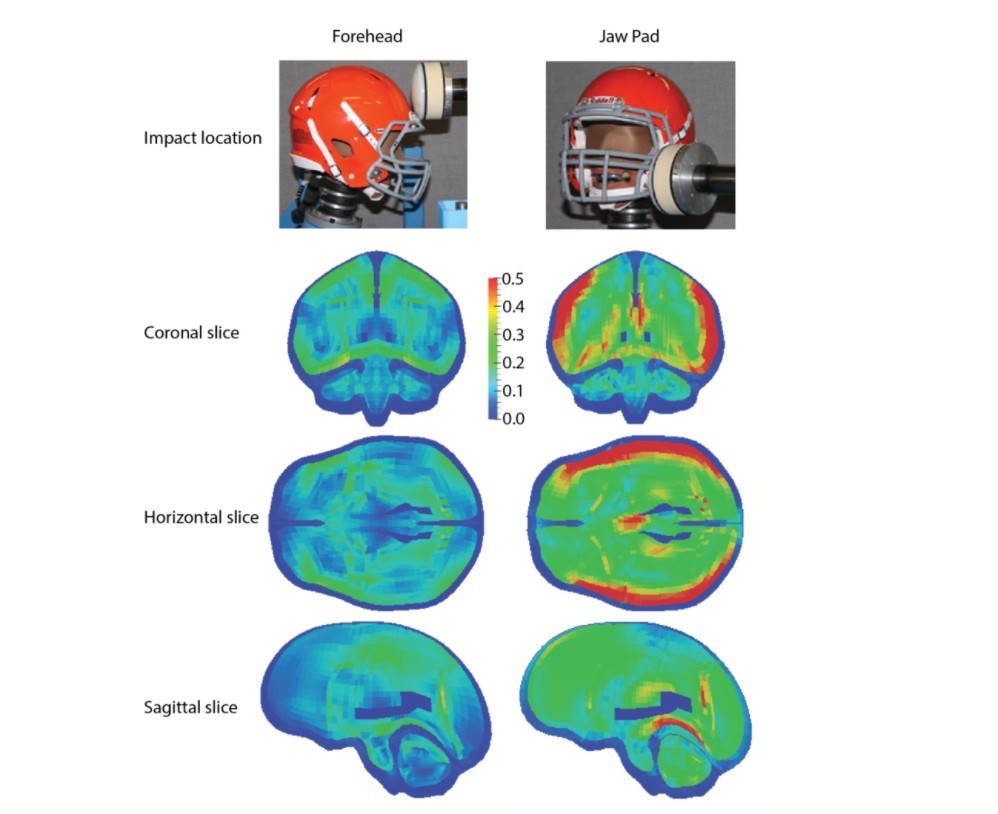

The Dangers of Rotational Energy

What is rotational energy? Think about how boxers get knocked out when they’re struck on the chin with a punch. The rapid twisting of the head that follows is what makes the brain shut down and take damage. See the photo below, which illustrates how trauma is felt in the brain during these types of impacts.

Two Kinds of Tests

There are two kinds of oblique testing, as it turns out.

In the old ECE 22.05 criteria and current SHARP testing, a helmet is dropped in such a way as to produce a glancing blow against a surface covered in rough 80 grit sandpaper that’s angled at 145 degrees. The idea is to measure the degree of friction (or amount of drag, basically) the helmet experiences in this scenario using sensors placed inside the angled landing pad.

That’s old news, though. ECE 22.06 and FIM have developed much more sophisticated tests for measuring these deadly rotational forces.

In this new oblique motorcycle helmet testing, the helmet is still dropped at a velocity of 8.5 m/s onto a 45-degree angled anvil with the sandpaper covering, but now fancy sensors are placed inside the headform to measure the amount of rotational force felt inside the brain during a crash.
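A rough way to see why an angled anvil produces rotation: the drop speed splits into a component perpendicular to the anvil face (the crushing part) and a component along it (the sliding part that the sandpaper’s friction converts into head rotation). This is a minimal sketch, assuming a purely vertical drop onto a face tilted 45 degrees from horizontal as described above; it ignores friction losses and rebound.

```python
import math


def oblique_components(speed_ms: float, anvil_angle_deg: float):
    """Split a vertical impact speed into components normal and tangential to
    an anvil face tilted anvil_angle_deg from horizontal."""
    theta = math.radians(anvil_angle_deg)
    normal = speed_ms * math.cos(theta)      # drives the linear, crushing impact
    tangential = speed_ms * math.sin(theta)  # sliding part; friction turns it into head rotation
    return normal, tangential


n, t = oblique_components(8.5, 45)
print(f"normal {n:.2f} m/s, tangential {t:.2f} m/s")
```

At 45 degrees the two components are equal, about 6.01 m/s each for an 8.5 m/s drop; a flat anvil (0 degrees) would leave no sliding component at all.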

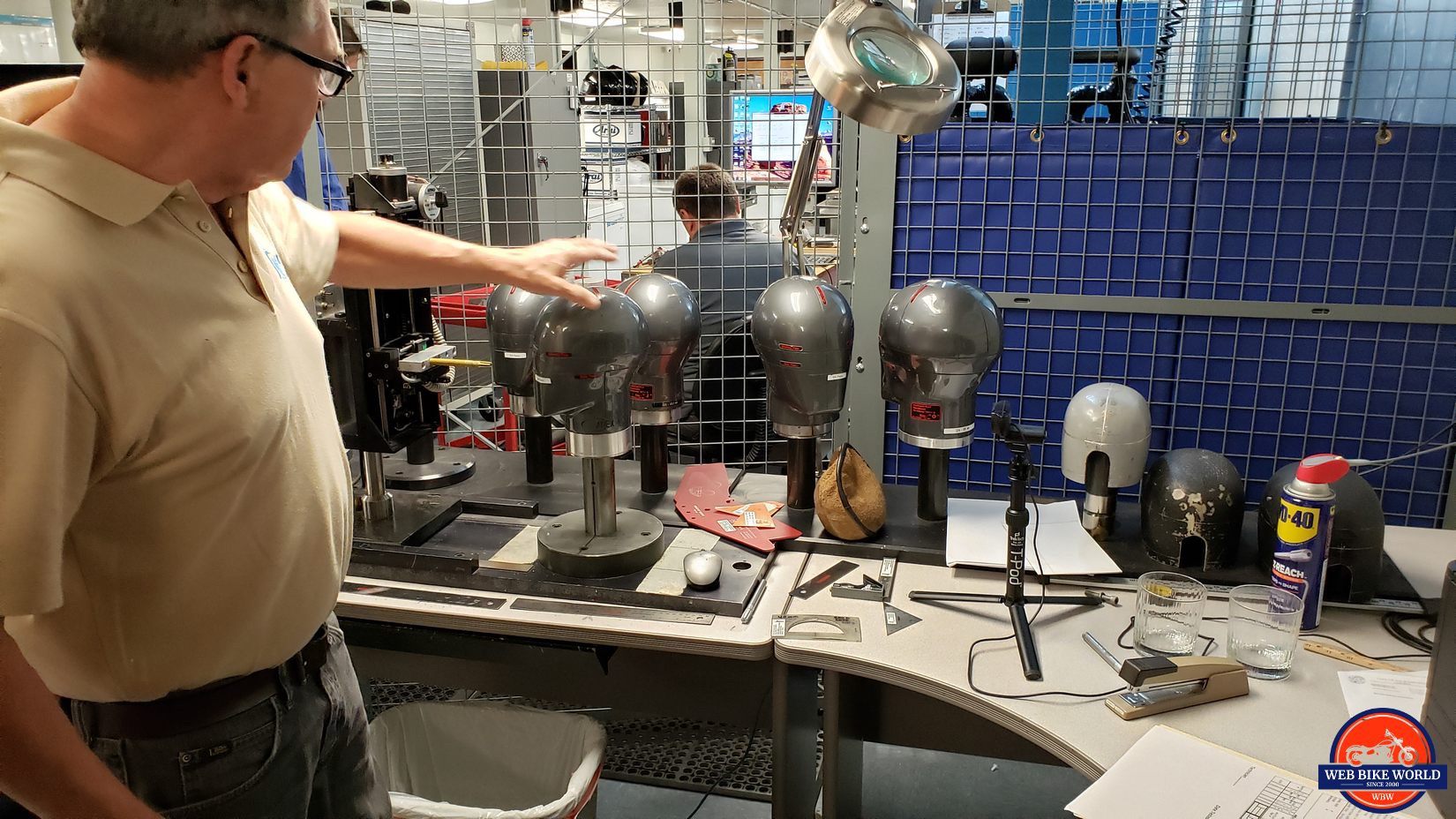

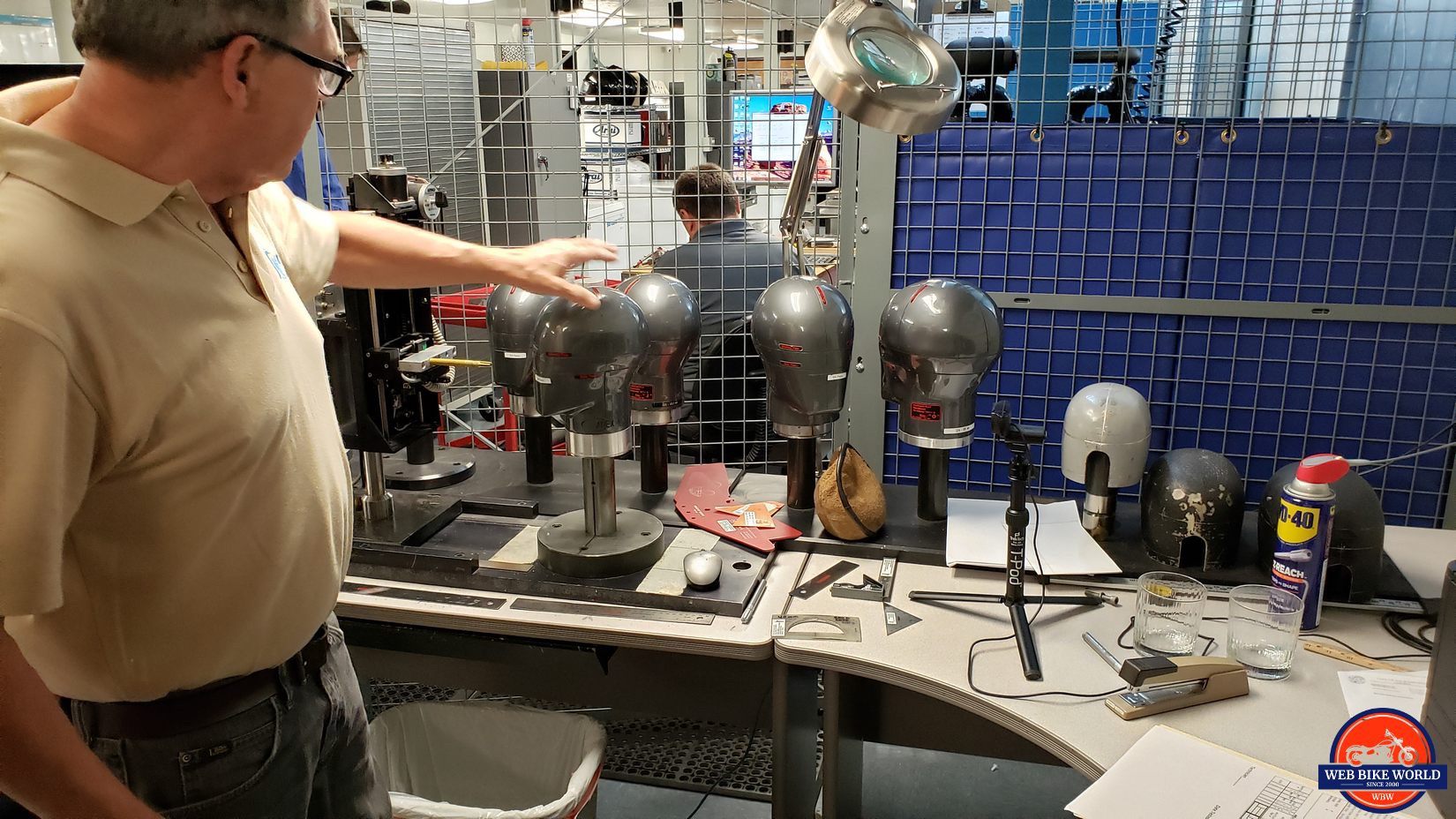

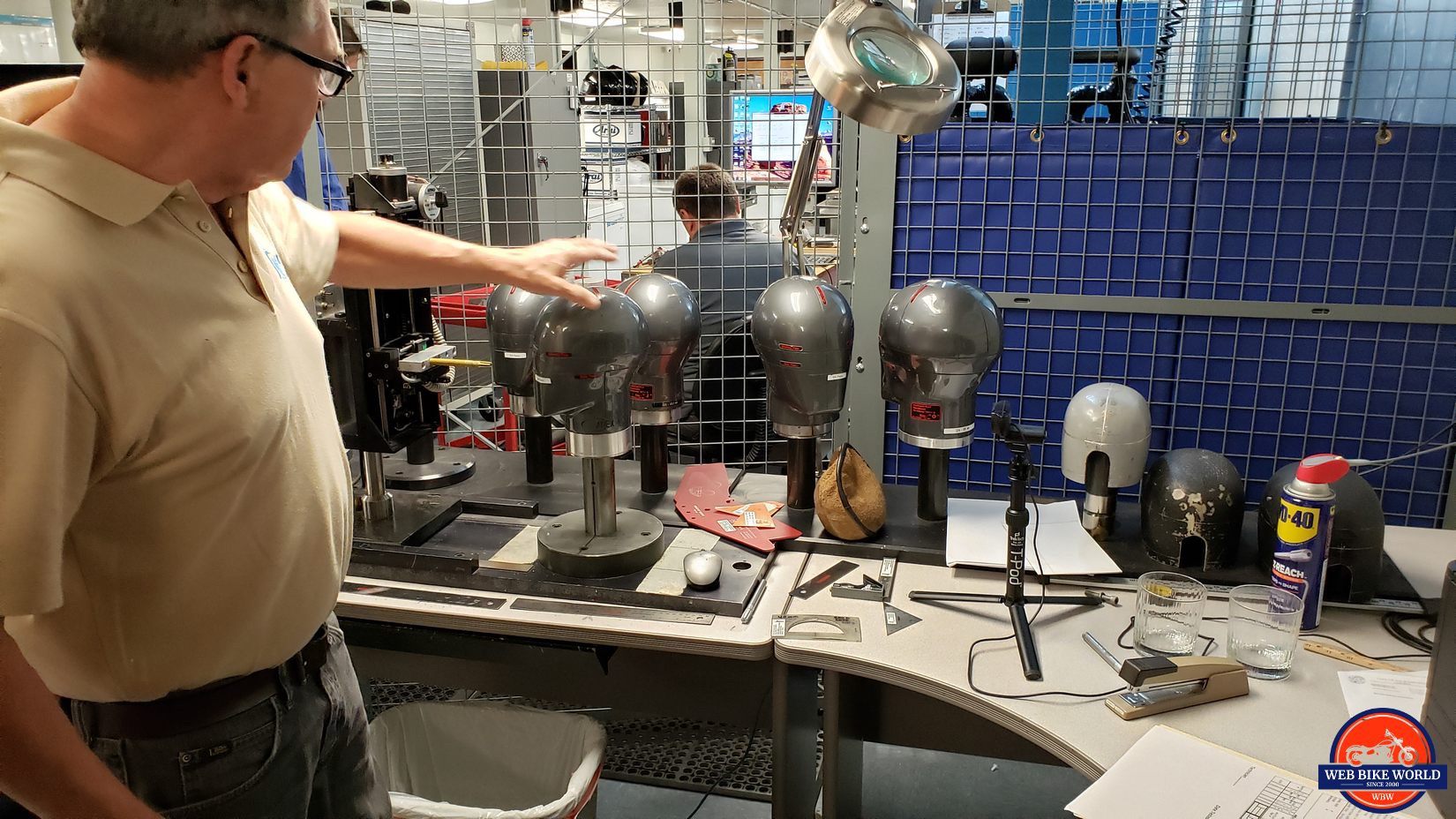

Watch this quick video showing two different methods the people at MIPS have been using to measure rotational force in their labs for some time now.

Measuring Rotational Forces?

Up until recently, the procedures used to measure rotational force were questionable to Snell’s leadership team. They felt the methods and equipment being used weren’t reliably consistent.

Now that improved sensors and procedures have come forth from ECE 22.06 and FIM, Snell has obtained the same reliable equipment and is experimenting with the test criteria for measuring rotational forces. They still aren’t completely at peace with what the numbers that ECE, FIM, and other testing organizations rely on actually mean, though.

Snell—being the inquisitive bunch they are—has been looking to poke holes in the testing data and methodology in order to condemn or confirm it.

For this reason, they began experimenting with anti-rotational force devices found in many popular motorcycle helmets. MIPS is likely the oldest and most effective countermeasure system available currently, so they began experimenting with helmets equipped with that famous, slippery, yellow liner installed in them.

A Potential Link Between MIPS & Long Hair?

They say that with the usual headforms (manufactured testing heads), which are bald, the MIPS system worked as advertised. The people at Snell were impressed with the range of measured energy reduction in these MIPS-equipped helmets (ranging from 15% to 50%).

That’s impressive, but since most motorcyclists don’t have bald heads made of metal as used in ECE 22.06 testing, Snell decided to throw a curveball into the equation. They installed a wig with hair 4 to 6 inches in length on the headform and repeated the oblique test to see what would happen.

Their claimed result? Having hair that long on the headform all but nullified the MIPS liner’s effect on limiting rotational force. They think the MIPS liner was compromised by the natural slippage produced by the layer of long hair.

More interesting to note: they found that having the wig itself on the headform acted a bit like a less effective natural anti-rotational device when they tried it in a non-MIPS-equipped helmet.

Snell hasn’t officially published these findings and cautioned me against taking the results of this experiment as absolute fact. They only had five to eight helmets to test with and would prefer to reproduce these results with two or three more helmet samples before deciding this is the truth about MIPS (and other similar anti-rotational technology).
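Snell didn’t share how its 15% to 50% figures were calculated, but reductions like these are commonly expressed as the drop relative to a comparable helmet without MIPS. A minimal sketch, assuming that definition; the rotational acceleration values are hypothetical, not Snell’s data.

```python
def percent_reduction(without_mips: float, with_mips: float) -> float:
    """Percentage drop in a measured quantity relative to the non-MIPS baseline."""
    return 100.0 * (without_mips - with_mips) / without_mips


# Hypothetical peak rotational accelerations (rad/s^2), not Snell's measurements.
baseline = 6000.0
print(percent_reduction(baseline, 5100.0))  # 15.0
print(percent_reduction(baseline, 3000.0))  # 50.0
```

Because the baseline sits in the denominator, the same absolute improvement reads as a larger percentage on a helmet that performed better to begin with.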

What Kind of Headform Is Best for Testing?

Snell also claims that the type of experimental headforms used in the testing could affect the amount of rotational force measured during oblique testing.

They say some of the headforms used by FIM, in particular, are “extra sticky”, thanks to the different silicones and rubber coatings used on them, which are meant to simulate human skin. Compared with what Snell found in their own experiments, these coatings appeared to be skewing the oblique test numbers.

When the Snell testing numbers were compared to outside data measured using a cadaver head in a different lab, they determined the headforms were performing differently. The artificial skin appeared to be helping the MIPS system work better than it would with real skin, due to it creating a better bond between the MIPS liner and the headform.

MIPS Responds

These results were difficult for me to interpret, so I reached out to MIPS directly for a possible explanation.

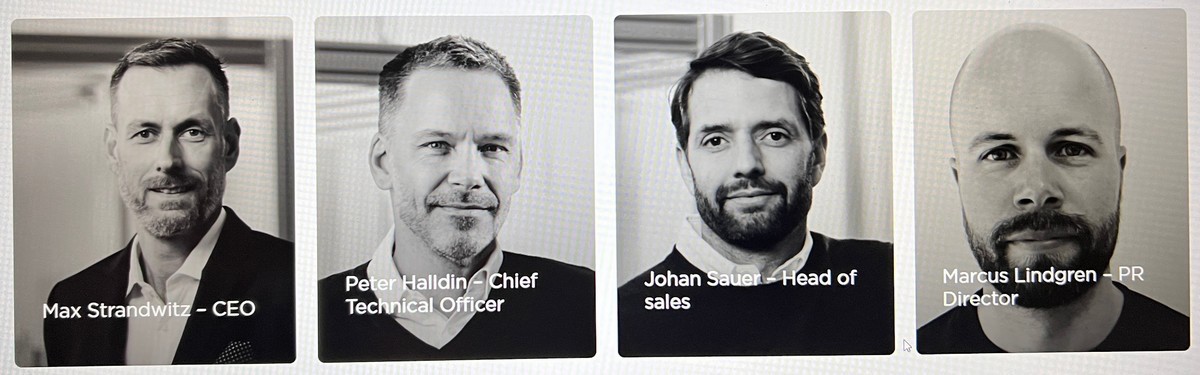

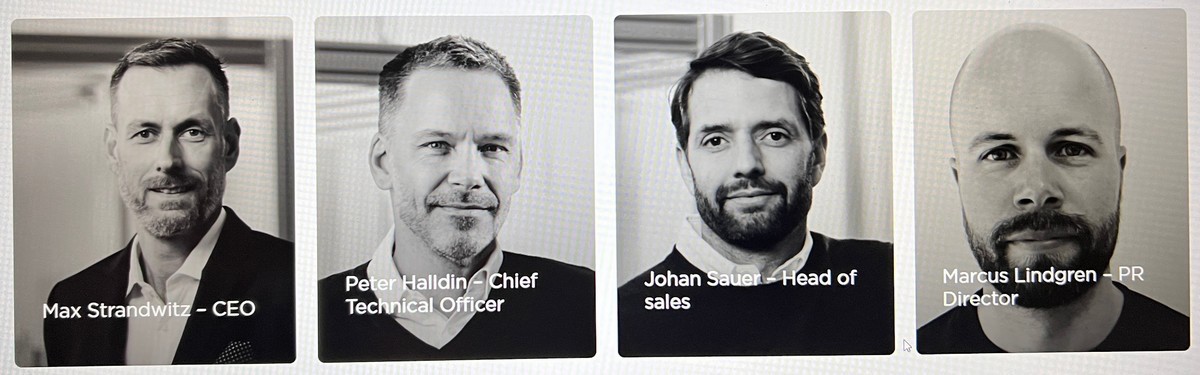

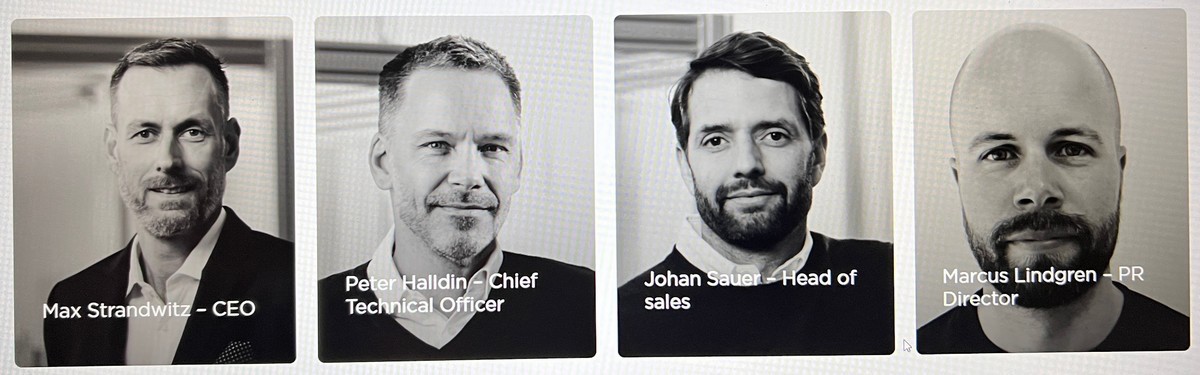

I was thrilled to spend time with MIPS’ Chief Technical Officer and Co-Founder Peter Halldin, and Marcus Lindgren, their PR director, on a Zoom call to discuss Snell’s MIPS testing.

Wigs, Bandanas, & Liners All Help Protect Brains

Peter explained to me that MIPS has also conducted experiments using wigs and other head coverings commonly worn by riders under their helmets. MIPS has compared their results against computer models based on cadaver heads with pressurized blood vessels, to better simulate a real living person’s anatomy and ensure the most accurate results possible.

MIPS concurred long hair and other head coverings did somewhat reduce rotational forces in non-MIPS helmets but found MIPS-equipped helmets still worked as they should regardless of having wigs on the headforms.

Peter said their findings were recently confirmed by an independent Canadian research group out of Vancouver, BC, which publishes on the meaforensic.com website.

Last year, Stephanie Bonin presented a study at the IRCOBI conference in Europe where she performed tests using a bare headform covered with a stocking and also with a wig. Her results showed a significant reduction of the measured head rotational kinematics using MIPS with or without a wig.

MIPS & Snell Need to Talk

These surprising Snell findings were already known to Peter, but he had no way of explaining why Snell had come up with results so different from those of MIPS. He has spoken with Snell representatives in the past, but not about these wig experiments specifically.

The good news is that since my discussion with Peter, a meeting has been scheduled between the Snell and MIPS teams to discuss these conflicting results.

What’s the Truth?

Either Snell has discovered something new about oblique testing that needs to be recognized by other organizations, or their unusual results are an anomaly. The data might even be derived from an error since Snell is relatively inexperienced with oblique testing.

I don’t know for sure, but I look forward to hearing what the true story is in the end.

Snell’s Contributions Still Count

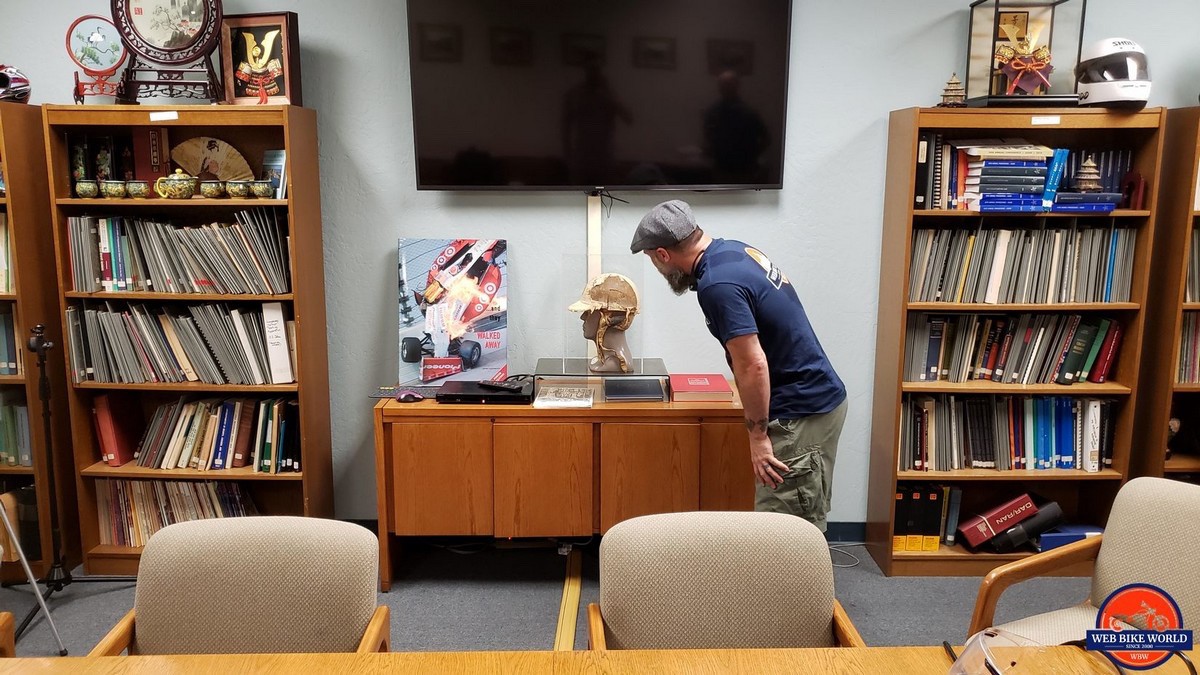

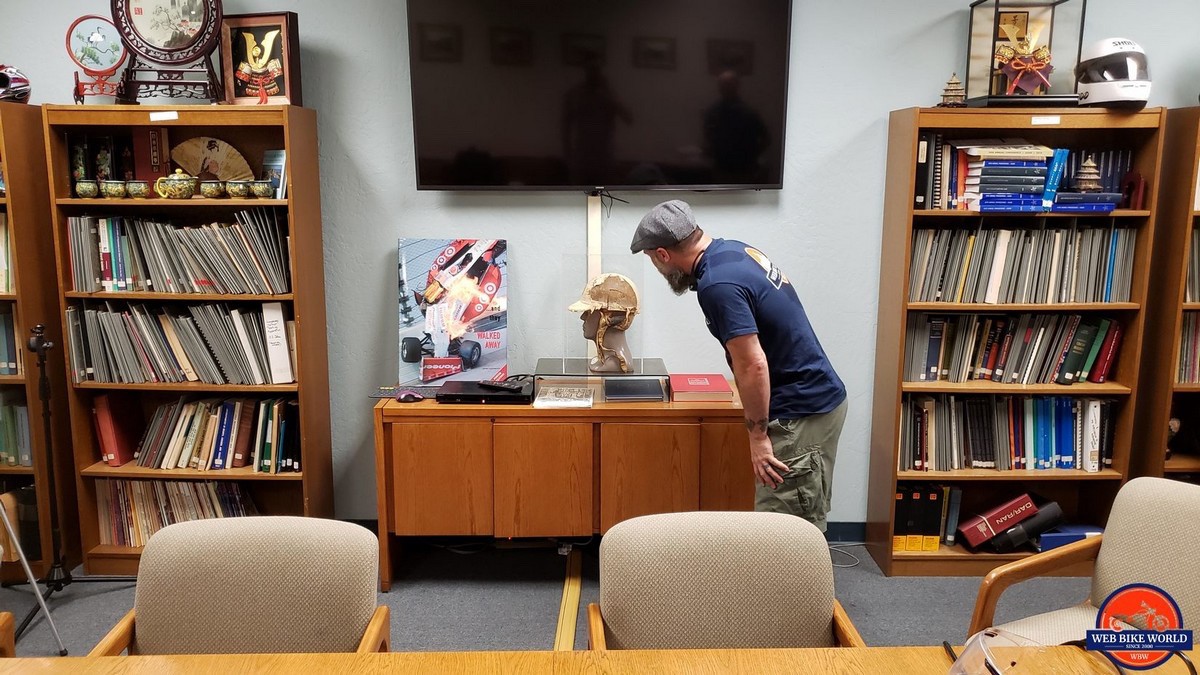

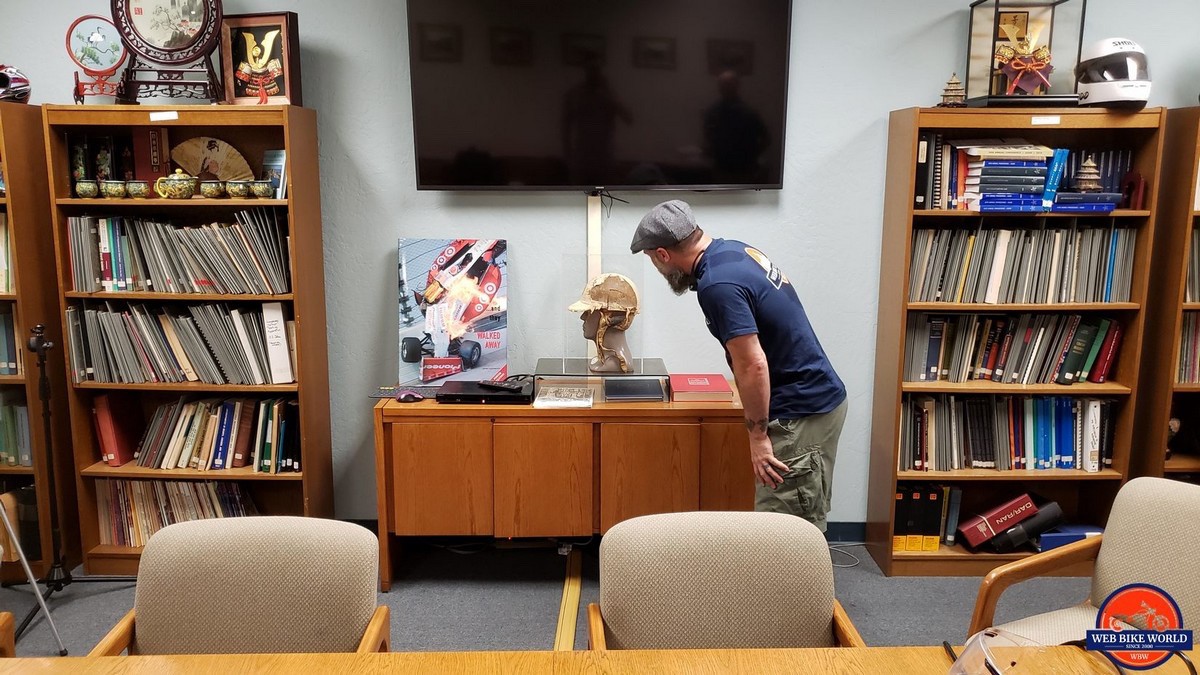

I visited the Snell Memorial Foundation in 2019 and came away impressed with the degree of thought behind their testing methods, attention to detail, and commitment to being a watchdog in the world of personal protection equipment. I hope Ryan F9, Bradley Brownell, and their fans will someday do the same. They should get their information directly from the source instead of relying so much on a press release and a 14-year-old article.

Which Standard Is the Best?

Personally, I don’t believe any of the current testing standards are superior to others. It appears all are based on science, all provide good guidance to manufacturers, and all have different strengths to consider.

Wouldn’t the most value to motorcyclists be found in the combined wisdom of multiple reputable sources with differing views instead of trying to place one above all? I’d rather buy a helmet with several certification stickers on the back than just one. Wouldn’t you?

The good news is, it’s starting to be more common to see helmets earning multiple certifications.

The Bell Race Star DLX Flex (above) passes Snell, DOT, and ECE 22.05.

The Arai RX-7V (Corsair X) (above) passes Snell, DOT, ECE 22.05, and FIM criteria.

There’s also a new helmet being crowdfunded by a group out of Spokane, WA, called the VATA7 X1 Speed and Light LED Smart Helmet.

VATA7 boasts certification by Snell, DOT, ECE 22.06, JIS, and FIM for the X1 Speed and Light. I struggle to believe they can pass all those standards with a helmet that weighs under 2 lbs, as they’re claiming. However, I sincerely hope they can. That would be a fantastic helmet!

The More Opinions the Better

Let’s also consider testing results from organizations like SHARP, Certimoov, and Crash if we truly want to be informed buyers. I especially like the Crash website because they publish actual G force measurements recorded during their testing.

The Truth about Helmet Testing Isn’t Provocative or Clickbait

This sounds like common sense, but I’ve noticed some people can’t help choosing a side when it comes to helmet testing and cheering on their hero while vilifying the “enemy” or “opponent” of their perceived truth.

Is there truly an enemy or opponent if everyone has the same goal? The endgame of all these testing groups is identical: ensuring every safety hat on the market will provide the best degree of protection possible to the wearer.

This seems to be a classic case of choosing the best way to skin the proverbial cat… which wouldn’t be necessary if the different groups involved didn’t let egos and politics get in the way of collaboration.

A Non-Profit Organization

Lastly, I may be misinterpreting his message, but from my point of view, Ryan implied in his video that Snell compromised their integrity by creating M2020R, and (even worse) that their motive was simply to ensure cash keeps flowing their way. If that’s what he meant, it’s a low blow aimed at an organization I see as being frugal, bare-bones, and devoted to their cause.

The SMF only has 6 full-time employees as of this writing, and their lab is in Sacramento, not Beverly Hills. No, they’re in this race because their hearts are in the right place. They certainly need to cover the costs of their operation, but they could likely manage that by focusing exclusively on the North American head protection market.

Disagree with their methods if you must, Ryan, but attacking Snell’s raison d’être is off-side.

The Foundation is a memorial to the late William Snell, who died tragically in 1956 because better-quality helmet standards weren’t in place back then. This is why Snell created M2020R: they genuinely want to keep contributing to the cause they have always stood for, so they adapted to remain relevant worldwide. That’s what every organization or person is supposed to do.

They talk the talk and walk the walk of people deserving respect.

During my video chat with the Snell team, I learned Ed Becker had retired over a year previously, but he attended our meeting voluntarily to add to the discussion about Snell’s work and focus.

Stay Classy, Snell Memorial Foundation

I wholeheartedly agree that industry experts should be challenged to defend their position, but that should be done by media and influencers who are motivated to seek truth rather than views and subscribers.

- Jim